Introducing SQLCoder2 and SQLCoder-7B

Today, we are thrilled to open-source our SQLCoder2 and SQLCoder-7B models. SQLCoder2 is a significant improvement over the original SQLCoder, which we released in August. SQLCoder-7B is our first 7B model, with nearly the same performance as SQLCoder2.

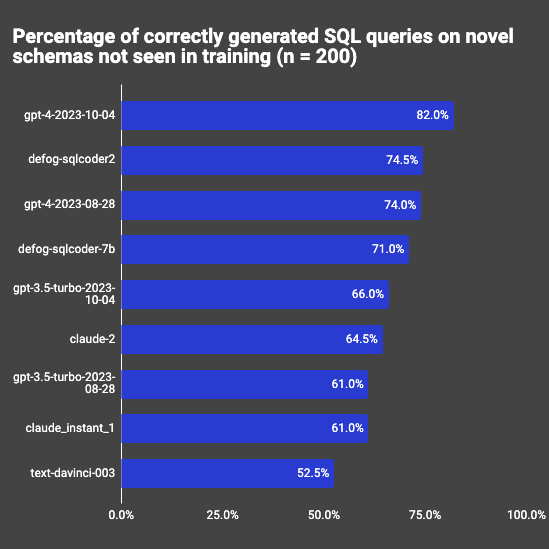

On our open-source evaluation framework, SQLCoder outperforms every available LLM except GPT-4 on novel schemas not seen in training. When fine-tuned on a specific schema, it outperforms all models, including GPT-4.

You can explore SQLCoder2 in our interactive demo at https://defog.ai/sqlcoder-demo.

SQLCoder2 is a 15B parameter LLM, fine-tuned from StarCoder. SQLCoder-7B is fine-tuned from the Mistral-7B model. Both models have been fine-tuned on hand-crafted SQL queries of increasing difficulty.

You can find our GitHub repo here, and our model weights on Hugging Face here. You can also use our interactive demo here to explore our model in the browser.
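For readers who want to try the weights locally, here is a minimal sketch of generating SQL with the Hugging Face `transformers` library. Several things in it are assumptions rather than details from this post: the model ID `defog/sqlcoder-7b`, the helper names `build_prompt` and `generate_sql`, and the prompt layout itself, which is purely illustrative. Check the repo and model card for the recommended prompt before relying on it.

```python
def build_prompt(question: str, schema: str) -> str:
    """Assemble an illustrative text-to-SQL prompt.

    This layout is a hypothetical example, not the official prompt format.
    """
    return (
        "### Task\n"
        f"Generate a SQL query to answer this question: {question}\n\n"
        "### Database Schema\n"
        f"{schema}\n\n"
        "### SQL\n"
    )


def generate_sql(question: str, schema: str,
                 model_id: str = "defog/sqlcoder-7b") -> str:
    """Load the model and generate SQL for one question.

    The default model_id is an assumption; use the ID from the model card.
    """
    # Imported here so the prompt helper works without these packages installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # half-precision weights
        device_map="auto",          # requires the `accelerate` package
    )
    inputs = tokenizer(build_prompt(question, schema), return_tensors="pt")
    inputs = inputs.to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=300, do_sample=False)
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_sql("How many users signed up last month?", "CREATE TABLE users (id INT, created_at TIMESTAMP);")` would download the weights on first use, so expect a long initial run.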

Motivation

Since our release of SQLCoder, we have seen nearly 20,000 downloads of the model weights on Hugging Face and more than 3,000 signups for the cloud-hosted version of SQLCoder. Based on user feedback, we realized two things: the original SQLCoder struggled with datetime functions, and it sometimes hallucinated column or table names.

We also saw hunger in the community for smaller models that could be deployed on smaller GPUs, instead of a 15B parameter model that requires 30GB or more of GPU VRAM to run with float16 weights.
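The 30GB figure follows from simple arithmetic: float16 stores each parameter in 2 bytes. The sketch below reproduces that estimate and applies it to a model of roughly 7B parameters. The function name `weight_memory_gb` is ours, the parameter counts are rounded, and the result covers weights only, so real usage will be higher once activations and the generation cache are included.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


FLOAT16_BYTES = 2  # float16 uses 2 bytes per parameter

print(weight_memory_gb(15e9, FLOAT16_BYTES))  # 15B parameters -> 30.0
print(weight_memory_gb(7e9, FLOAT16_BYTES))   # ~7B parameters -> 14.0
```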

SQLCoder2 and SQLCoder-7B address both of these problems head-on, and are much better suited for production use cases.

Approach

We largely worked with the same approach as the original SQLCoder model, but made a number of changes in the training process and while curating data. You can read more about those changes here.

Results

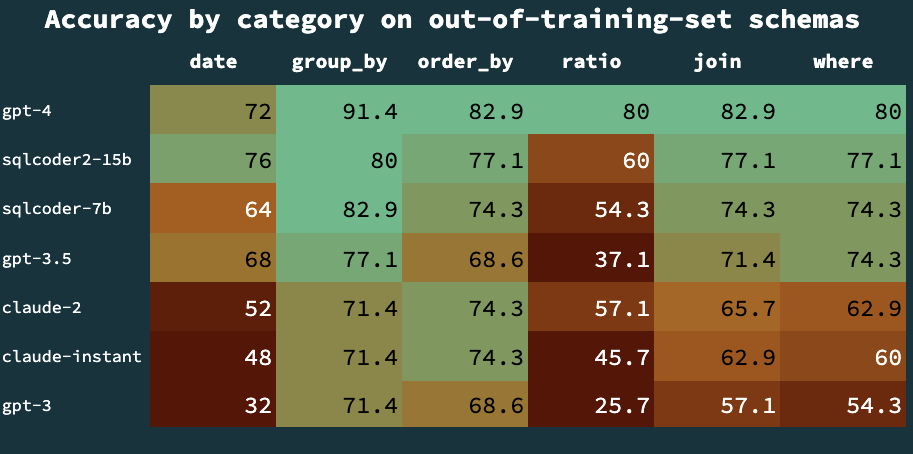

SQLCoder2 and SQLCoder-7B perform well across all categories, beating all models except GPT-4 on out-of-training-set schemas. When these models are fine-tuned on a particular schema, results are significantly better than GPT-4.

Explore the model

You can explore our model at https://defog.ai/sqlcoder-demo using a UI, or at

About the authors

Wong Jing Ping is a Software Engineer at Defog. He works on building Defog’s Machine Learning and engineering stack. Before joining Defog, he worked on account and tweet recommendations at Twitter for six years. Outside of work, he enjoys recreational climbing and perfecting that single-origin pourover 👌🏻 He also builds small keyboards.

Wendy Aw is a Machine Learning Engineer at Defog, working on model-finetuning and dataset curation. Before joining Defog, Wendy spent most of the last decade as a copywriter, where she helped build some of the world’s biggest brands.

Rishabh Srivastava is a co-founder of Defog. Before starting Defog, he founded Loki.ai – serving more than 5 billion API requests for Asian enterprises.
